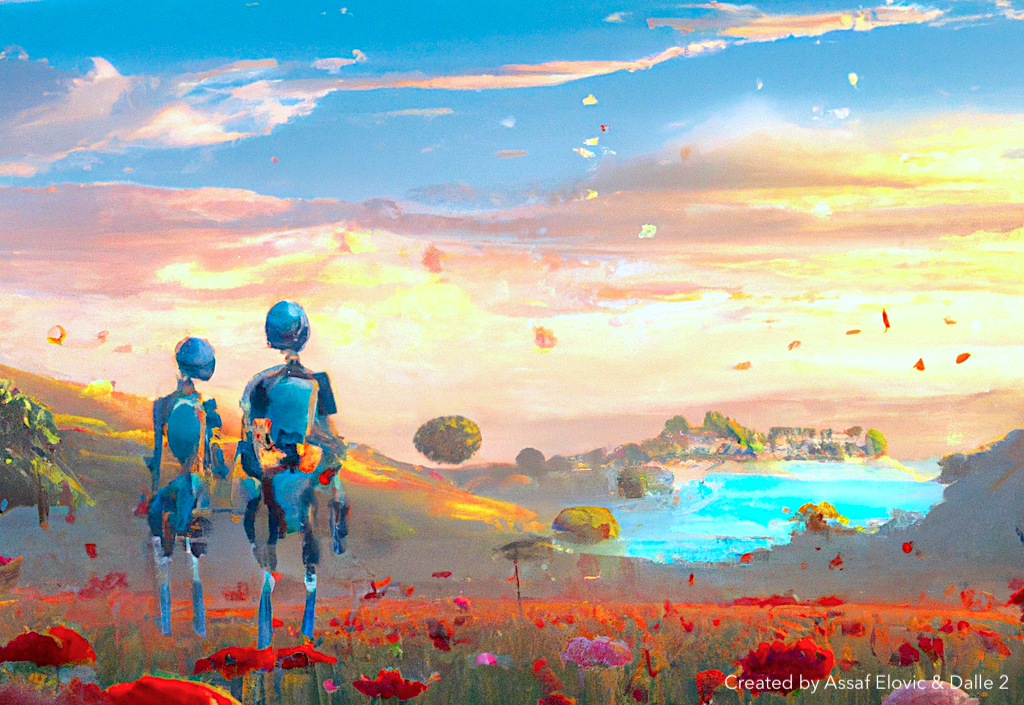

This image was created by Assaf Elovic and Dalle

Why you should care about the generative AI revolution

This summer has been a game-changer for the AI community. It’s almost as if AI has erupted into the public eye in a single moment. Now everyone is talking about AI — not just engineers, but Fortune 500 executives, consumers, and journalists.

There is enough written about how GPT-3 and Transformers are revolutionizing NLP and their ability to generate human like creative content. But I’ve yet to find a one stop shop for getting a simple overview of its capabilities, limitations, current landscape and potential. By the end of this article, you should have a broad sense of what the hype is all about.

Let’s start with basic terminology. Many confuse GPT-3 with the more broad term Generative AI. GPT-3 (short for Generative Pre-trained Transformer) is basically a subset language model (built by OpenAI) within the Generative AI space. Just to be clear, the current disruption is happening in the entire space, with GPT-3 being one of its enablers. In fact, there are now many other incredible language models for generating content and art such as Bloom, Stable Diffusion, EleutherAI’s GPT-J, Dalle-2, Stability.ai, and much more, each with their own unique set of advantages.

The What

So what is Generative AI? In very short, Generative AI is a type of AI that focuses on generating new data or creating new content. This can be done through a variety of methods, such as creating new images or videos, or generating new text. As the saying goes, “a picture is worth a thousand words”:

Jason Allen’s A.I.-generated work, “Théâtre D’opéra Spatial,”

The image above was created by AI and has recently won the Colorado State Fair’s annual art competition. Yes, you read correctly. And the reactions did not go well.

If AI can generate art so well that not only is it not distinct from “human” art, but also good enough to win competitions, then it’s fair to say we’ve reached the point where AI can now take on some of the most challenging human tasks possible, or as some say “create superhuman results”.

Another example is Cowriter.org which can generate creative marketing content, while taking attributes like target audience and writing tone into account.

In addition to the above examples, there are hundreds if not thousands of new companies leveraging this new tech to build disruptive companies. The biggest current impact can be seen in areas such as text, video, image and coding. To name a few of the leading ones, see the landscape below:

However, there are some risks and limitations associated with using generative models.

One risk is that of prompt injection, where a malicious user could input a malicious prompt into the model that would cause it to generate harmful output. For example, a user could input a prompt that would cause the model to generate racist or sexist output. In addition, some users have found that GPT-3 fails when prompting out-of-context instructions as seen below:

Another risk is that of data leakage. If the training data for a generative model is not properly secured, then it could be leaked to unauthorized parties. This could lead to the model being used for malicious purposes, such as creating fake news articles or generating fake reviews. This is a concern because GPT models can be used to generate text that is difficult to distinguish from real text.

Finally, there are ethical concerns about using generative models. For example, if a model is trained on data from a particular population, it could learn to biased against that population. This could have harmful consequences if the model is used to make decisions about things like hiring or lending.

The Why

So why now? There are a number of reasons why generative AI has become so popular. One reason is that the availability of data has increased exponentially. With more data available, AI models can be better trained to generate new data that is realistic and accurate. For example, GPT3 was trained on about 45TB of text from different datasets (mostly from the internet). Just to give you an intuition of how fast AI is progressing — GPT2 (the previous version of GPT) was trained on 8 million web pages, only one year before GPT-3 was released. That means that GPT-3 was trained on 5,353,569x more data than it’s predecessor a year earlier.

Another reason is that the computing power needed to train generative AI models has become much more affordable. In the past, only large organizations with expensive hardware could afford to train these types of models. However, now even individuals with modest computers can train generative AI models.

Finally, the algorithm development for generative AI has also improved. In the past, generative AI models were often based on simple algorithms that did not produce realistic results. However, recent advances in machine learning have led to the development of much more sophisticated generative AI models such as Transformers.

The How

Now that we understand the why and what about generative AI, let’s dive into the potential use cases and technologies that power this revolution.

As discussed earlier, GPT-3 is just one of many solutions, and the market is rapidly growing with more alternatives, especially for free to use open source. As history shows, the first is not usually the best. My prediction is that within the next few years, we’ll see free alternatives so good that it’ll be common to see AI integrated in almost every product out there.

To better understand the application landscape and which technologies can be used, see the following landscape mapped by Sequoia:

Generative AI Application landscape, mapped by Sequoia

As described earlier, there are already so many alternatives to choose from, but OpenAI is still leading the market in terms of quality and usage. For example, Jasper.ai (powered by GPT-3) just raised $125M at a $1.5B valuation, surpassing OpenAI in terms of annual revenue. Another example is Github, which released Copilot (also powered by GPT-3), which is an AI assistant for coding. OpenAI has already dominated three main sectors: GPT-3 for text, Dalle-2 for images and Whisper for speech.

It seems that the current headlines and business use cases are around creative writing and images. But what else can generative AI be used for? NFX has curated a great list of potential use cases as seen below:

NFX (including myself) believe that generative AI will eventually dominate almost all business sectors, from understanding and creating legal documents, to teaching complex topics in high education.

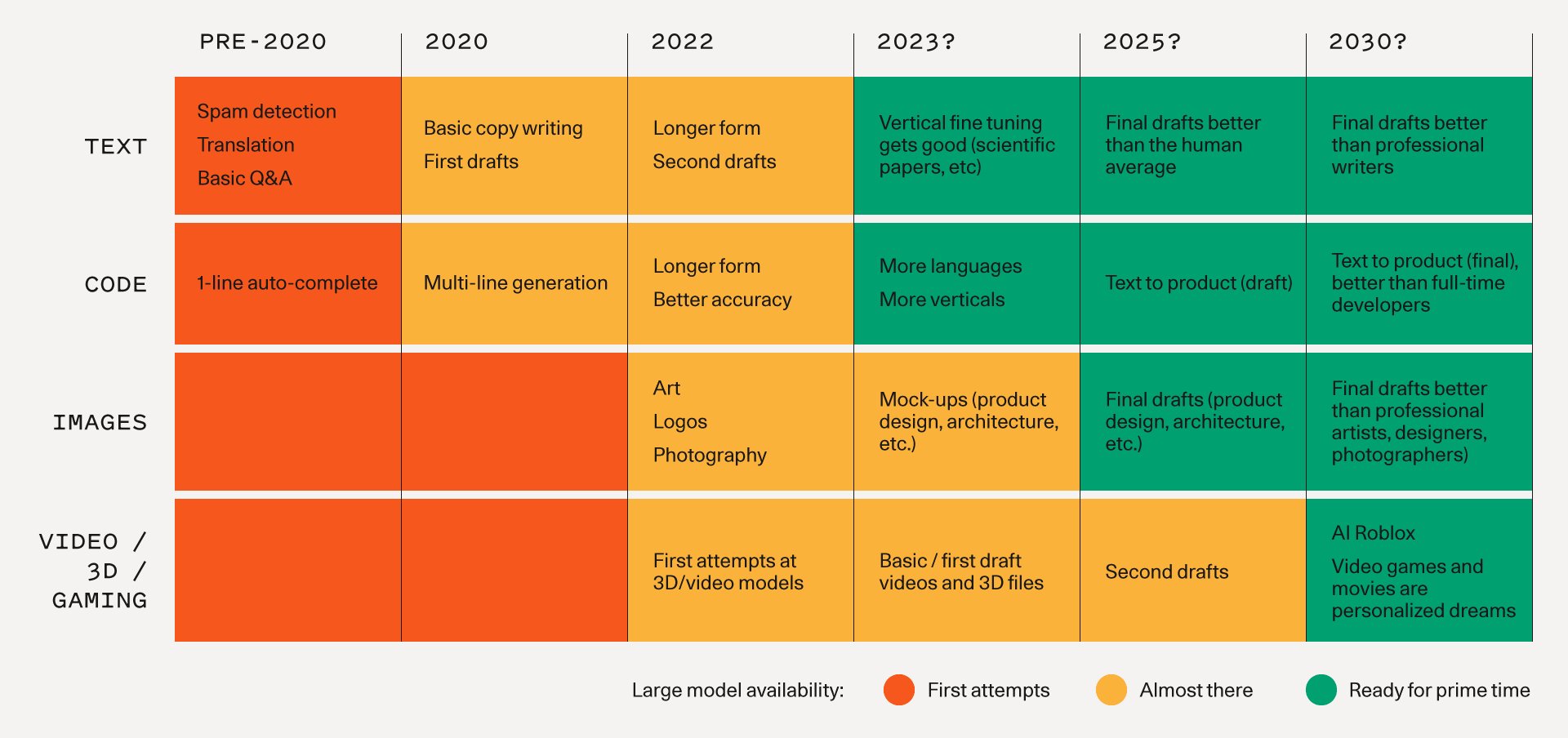

More specifically, the below chart illustrates a timeline for how Sequoia expect to see fundamental models progress and the associated applications that become possible over time:

Based and their prediction, AI will be able to code products from simple text product descriptions by 2025, and write complete books by the end of 2030.

If art generated today is already good enough to compete with human artists, and if generated creative marketing content can not be differed from copywriters, and if GPT-3 was trained on 5,353,569x more data than its predecessor only a year later, then you tell me if the hype is real, and what can we achieve ten years from today. Oh, and by the way, this article’s cover image and title were generated by AI :).

I hope this article provided you with a simple yet broad understanding of the generative AI disruption. My next articles will dive deeper into the more technical understanding of how to put GPT-3 and its alternatives into practice.

Thank you very much for reading! If you have any questions, feel free to drop me a line in the comments below!